Taking the AI training wheels off: moving from PoC to production

In helping dozens of organizations build on-premises AI initiatives, we have seen three fundamental stages organizations go through on their journey to enterprise-scale AI.

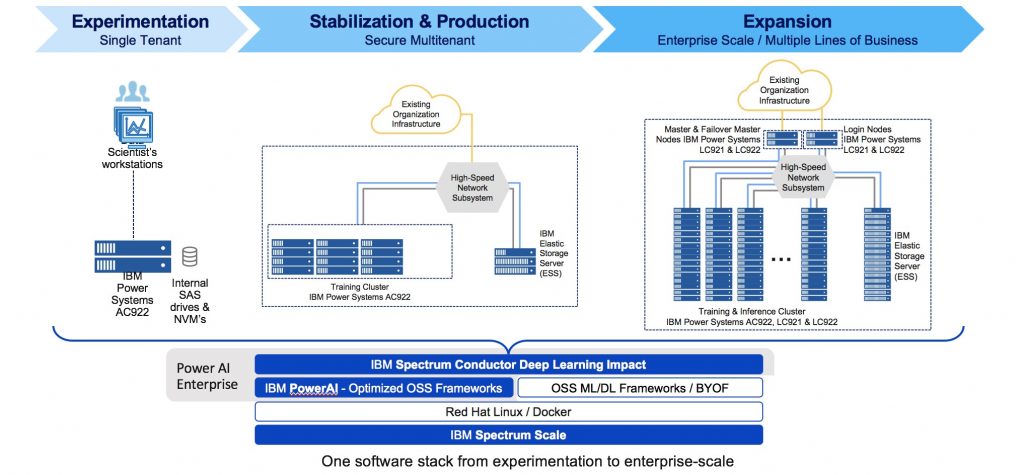

First, individual data scientists experiment on proof-of-concept projects, some of which show promise. These PoCs then often hit knowledge, data management and infrastructure performance obstacles that keep them from reaching the second stage, where optimized, trained models are produced quickly enough to deliver value to the organization. Moving to the third and final stage of AI adoption, where AI is integrated across multiple lines of business and requires enterprise-scale infrastructure, presents significant integration, security and support challenges.

Today IBM introduced IBM PowerAI Enterprise and an on-premises AI infrastructure reference architecture to help organizations jump-start AI and deep learning projects, and to remove the obstacles to moving from experimentation to production and ultimately to enterprise-scale AI.

On-premises AI infrastructure reference architecture

AI and deep learning are sophisticated, rapidly changing areas of data analytics. Few people have the extensive knowledge and experience needed to implement a solution (at least not today).

To help fill this knowledge gap, IBM has built PowerAI Enterprise: easy-to-use, integrated tools to get AI open source frameworks up and running quickly. These tools use cognitive algorithms and automation to dramatically increase the productivity of data scientists throughout the AI workflow. The tested, validated and optimized AI reference architecture includes GPU-accelerated servers purpose-built for AI. It also includes a scalable storage infrastructure that not only cost-effectively handles the volume of data needed for AI, but also delivers the performance needed to keep data-hungry GPUs busy at all times.

Ritu Jyoti, Vice President of IDC’s Cloud IaaS, Enterprise Storage and Server analyst, noted, “IBM has one of the most comprehensive AI solution stacks that includes tools and software for all the critical personas of AI deployments including the data scientists. Their solution helps reduce the complexity of AI deployments and help organizations improve productivity and efficiency, lower acquisition and support costs, and accelerate adoption of AI.”

One customer that has successfully navigated the new world of AI is Wells Fargo, which uses deep learning models to comply with a critical financial validation process. Its data scientists build, enhance, and validate hundreds of models each day. Speed is critical, as is scalability, because they deal with growing amounts of data and more complicated models. As Richard Liu, Quantitative Analytics manager at Wells Fargo, said at IBM Think, “Academically, people talk about fancy algorithms. But in real life, how efficiently the models run in distributed environments is critical.” Wells Fargo uses the IBM AI Enterprise software platform for the speed and the resource scheduling and management functionality it provides. “IBM is a very good partner and we are very pleased with their solution,” added Liu.

When a large Canadian financial institution wanted to build an AI Center of Competency for 35 data scientists to help identify fraud, minimize risk, and increase customer satisfaction, they turned to IBM. By deploying the IBM Systems AI Infrastructure Reference Architecture, they now provide distributed deep learning as a service designed to enable easy-to-deploy, unique environments for each data scientist across shared resources.

Get started quickly

PowerAI Enterprise shortens the time to get up and running with an AI environment that supports the data scientist from data ingest and preparation, through training and optimization, and finally to testing and inference. Included are fully compiled and ready-to-use IBM-optimized versions of popular open source deep learning frameworks (including TensorFlow and IBM Caffe), as well as a software framework designed to support distributed deep learning and scale to hundreds and thousands of nodes. The whole solution comes with support from IBM, including the open source frameworks.

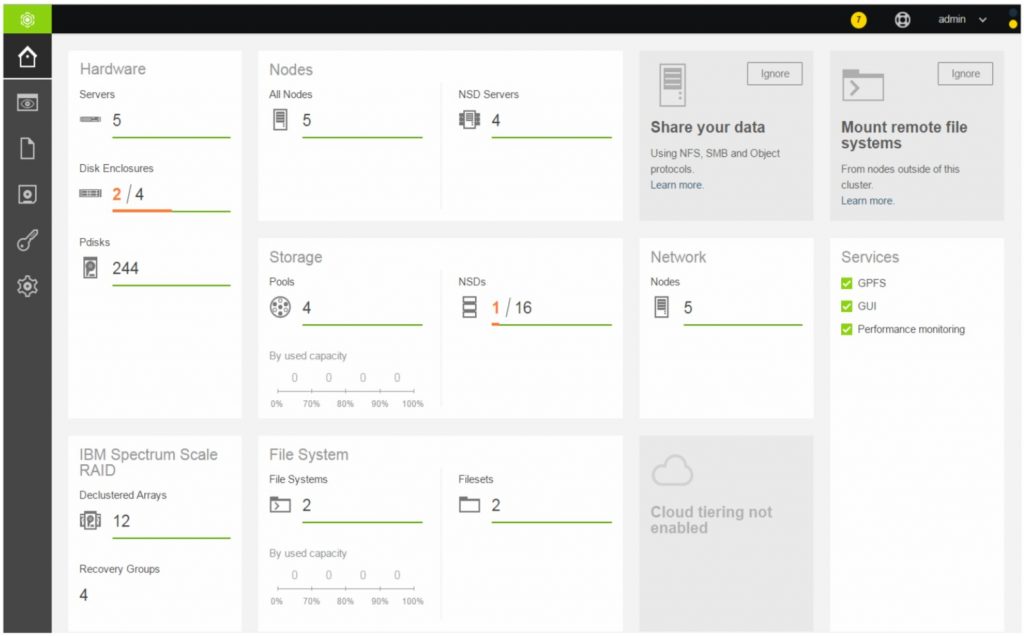

The IBM Systems AI Infrastructure Reference Architecture is built on IBM Power System servers and IBM Elastic Storage Server (ESS), with a software stack that includes IBM PowerAI Enterprise and IBM’s award-winning Spectrum Scale. IBM PowerAI Enterprise installs full versions of IBM PowerAI base, IBM Spectrum Conductor and IBM Spectrum Conductor Deep Learning Impact.

Learn more about the IBM Systems AI Infrastructure Reference Architecture and IDC’s review of the architecture here.

IBM PowerAI Enterprise

IBM PowerAI Enterprise extends all of the capability we have been packing into our distribution of deep learning and machine learning frameworks, PowerAI, by adding tools which span the entire model development workflow. With these capabilities customers can develop better models more quickly, and as their requirements grow, efficiently scale and share data science infrastructure.

To shorten data preparation and transformation time, PowerAI Enterprise integrates a structured, template-based approach to building and transforming data sets. It also includes powerful model setup tools designed to eliminate the earliest “dead end” training runs. By instrumenting the training process, PowerAI Enterprise lets a data scientist see real-time feedback on the training cycle, cut potentially wasted time and reach target accuracy sooner.
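As a rough illustration of what instrumenting a training run can mean in practice, the following minimal Python sketch records the loss after each epoch, prints it as feedback, and stops a run that has stalled. It is not PowerAI Enterprise code and does not use its API; the `TrainingMonitor` class, the `run_training` function, and the `patience` and `min_delta` parameters are hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field
from typing import Iterable, List


@dataclass
class TrainingMonitor:
    """Hypothetical helper: tracks per-epoch loss and flags stalled runs."""
    patience: int = 3          # epochs without improvement before stopping
    min_delta: float = 1e-3    # smallest loss drop that counts as progress
    history: List[float] = field(default_factory=list)
    best: float = float("inf")
    stale_epochs: int = 0

    def report(self, epoch: int, loss: float) -> bool:
        """Record one epoch; return True if the run should be stopped."""
        self.history.append(loss)
        if self.best - loss > self.min_delta:
            # Meaningful improvement: remember it and reset the stall counter.
            self.best = loss
            self.stale_epochs = 0
        else:
            self.stale_epochs += 1
        # Feedback a data scientist could watch while the run is in progress.
        print(f"epoch {epoch}: loss={loss:.4f} best={self.best:.4f}")
        return self.stale_epochs >= self.patience


def run_training(losses: Iterable[float], monitor: TrainingMonitor) -> int:
    """Feed a stream of losses to the monitor; return the epochs actually run.

    `losses` stands in for a real training loop that yields one loss per epoch.
    """
    epochs_run = 0
    for epoch, loss in enumerate(losses, start=1):
        epochs_run = epoch
        if monitor.report(epoch, loss):
            break  # stalled run: stop early instead of wasting compute
    return epochs_run
```

In a real workflow the simulated loss stream would be replaced by the framework's own training loop, and the stopping rule would be tuned to the model and data set.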

Bringing these and other capabilities together accelerates development for data scientists, and the combination of automating the workflow and extending the capabilities of open source frameworks unlocks the hidden value in organizational data. Learn more about IBM PowerAI Enterprise here.

The post Taking the AI training wheels off: moving from PoC to production appeared first on IBM IT Infrastructure Blog.